Arteïa's perspective on the Art Identification Standard initiative - part 3.

In the two previous essays (Part 1, Part 2), we explored the asymmetric ecosystem of art and the formation of the Art Identification Standard to address some of its looming challenges. Information asymmetry is certainly a tough nut to crack, especially considering the ever-changing dynamics of the modern art market and its business actors. In today's digital age, data is everywhere, and so is data pollution, which, when left unattended, slows the progress of companies that rely on efficient data economies and makes it harder for everyone to derive value from available information.

Creating digital representations of art objects in a global system that acts as an independently verifiable, immutable source of truth opens up non-zero-sum business models, in which companies that usually compete with each other aim to expand the size of the art market (and increase profit margins) through collaboration and mutually beneficial goals. Often at the very core of organisational prowess lies a powerful technical solution that goes beyond the constraints of today with a set of ambitious goals to change tomorrow. Most progress arises from innovation or crisis. Today, we face both of those triggers. The novelty in persisting art-related information in the digital space is rooted in a new way of thinking about identifiers for unique objects (or unique classes of objects), one that leverages the latest advances in information science.

Exploring universally unique identifiers

The world is random. Well, maybe it is, maybe it isn’t. Stepping back from trying to dissect what mathematics and philosophy are telling us about the reality we thrive in (and the art we cherish), randomness (or pseudorandomness, which is an inherent property of every Turing machine that does not rely on an external source of entropy) lies at the heart of every computing mechanism and technique that deals with processing information.

Working under the initial assumption that creating and assigning an ID in a global digital repository for art objects should be as lightweight and unobtrusive as possible, the first thing that comes to mind is a simple system that tags objects with one of the universally unique identifier (UUID) variants that are ubiquitous across programming languages and operating systems. UUIDs have a fixed size of 128 bits, are written as 36 characters (32 hexadecimal digits without the dashes), and their random variants are generated with enough entropy (derived from hardware interrupts, mouse movements, disk activity or other sources) to reduce the chance of a collision (two objects sharing the same identifier) to a negligible level, which generally remains true even in distributed computing. A notable exception is mobile devices, which are often treated as low-trust hardware whose (pseudo)random capabilities may be insecure and prone to attacks.

77120652-cca9-4c60-bacc-6a9c4628ff10
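As a small illustration, the identifier above can be inspected with Python's standard `uuid` module. This is only a sketch of the properties mentioned in the text (size, textual length, version); the variable names are our own.

```python
import uuid

# Parse the example identifier shown in the text.
example = uuid.UUID("77120652-cca9-4c60-bacc-6a9c4628ff10")

print(example.version)                   # version number encoded in the identifier
print(example.variant == uuid.RFC_4122)  # layout defined by RFC 4122
print(len(example.bytes) * 8)            # size in bits
print(len(str(example)))                 # characters including dashes
print(len(example.hex))                  # hexadecimal digits without dashes

# Generate a fresh random (version 4) identifier; the value differs on every run.
fresh = uuid.uuid4()
print(fresh)
```

Note that nothing in the identifier says anything about the object it is attached to, which is the limitation discussed next.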

The disadvantages of using UUIDs in data stores are plain to see: their size (and the performance overhead that goes along with it), problematic ordering, records inserted at random positions due to the absence of sequentiality, cumbersome debugging, and a few more. Despite these imperfections, universally unique identifiers have been used successfully for decades in the vast majority of computer systems as the smallest atomic unit to map information, tag objects, or identify digital abstractions across multiple types of data stores. However, because they are not context-aware, UUIDs are highly dependent on the decisions of the system designer and business stakeholders, who use them to satisfy a very specific and narrowly defined set of requirements. While effective at representing data, they work within the walled gardens of their pre-defined environments and are unable to carry the object's context or model the actions that created them in a decentralised and independently verifiable way.

Pointing at silos

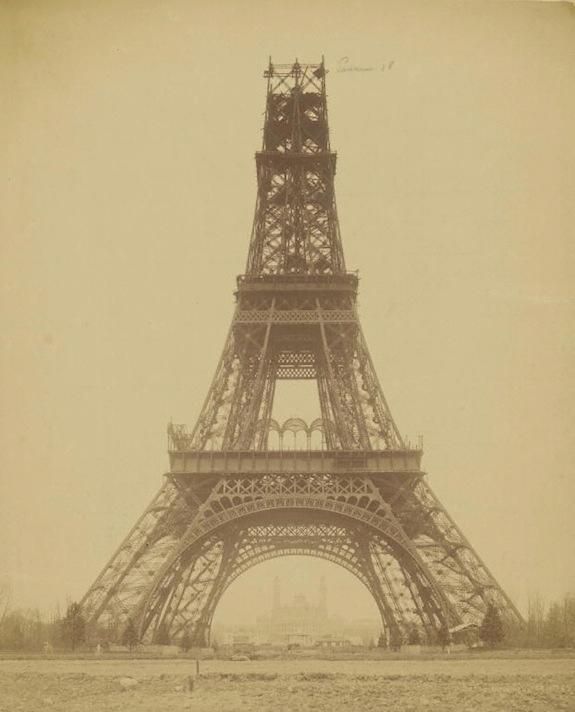

If we were to create and assign a unique, global, digital identifier to Guernica today (a magnificent, monochrome painting by Pablo Picasso that depicts the tragedies of war and the unexpected loss of innocence), we would probably end up with a seemingly random set of alphanumeric characters ready to be used inside a database system. Unless we take additional steps and assign the freshly generated alphanumerics (or hashes) to a data entity that provides further context, we do not get much value from the unique identifier itself. Taking these additional steps results in multi-layered software for recording and maintaining art data, with many internal and external software dependencies. The result is an unverified and non-publicly auditable source of truth. If a company decides to build software that aims to act as a provenance database for Picasso artworks, it will probably end up building yet another information silo, as indistinguishable and as unverifiable as the other silos available on the market. Put more bluntly, the provenance of a Picasso artwork is only as good as the company that built the software to keep the data claims it to be. A Picasso painting that originates from a single information silo and travels along a value chain interacts with multiple actors that have no means to independently verify its provenance or whereabouts. In the case of a sale, this requires an additional set of services and checks and adds considerable risk, cost and bureaucracy to the process. Information silos are also often subject to security breaches and information loss. Nowadays, a set of new, decentralised solutions is fiercely competing with this paradigm of the past, trying to give the power of ownership over information back to individuals.

Thinking in NFTs (non-fungible tokens) - craze and limitations

Non-fungible tokens took the blockchain world by storm in 2017, beginning with the CryptoPunks project, which introduced digital assets as collectibles. A fungible thing can be replaced by an equivalent, whereas the non-fungible token design requires uniqueness. By providing a standard for representing scarcity and persistence on the blockchain (in this case, ERC-721), NFTs quickly moved from representing digital collectibles to other objects such as tickets, contractual documents, or artworks. NFTs are designed from the ground up to be manageable, ownable, and tradeable, providing a common interface for blockchain developers. They share, however, a certain set of features that make them less convincing for a generally accepted, more universal, and independent scenario.

The most successful and secure implementations of non-fungible digital assets are available on the Ethereum network, which, at the time of writing, is a public blockchain driven by a proof-of-work consensus algorithm. Ethereum works in favour of openness and decentralisation but relies on public miners (or, in the case of Ethereum 2.0, cryptocurrency stakers) to secure the state of the network. Using Ethereum to build anything but a prototype of a global art object repository raises the question of eventual consistency and brings the cost of ether (and its volatility) into the picture.

Beyond a set of attributes and functions to manage balance, ownership, and transfer, NFT implementations contain metadata fields that allow developers to add context to the digital assets they create. These are simple mappings of NFT identifiers to metadata URIs residing somewhere in the public space. This makes NFTs dependent on public APIs and undermines the advantage of them residing on the blockchain, as any digital asset that oraclises itself (points to or depends on external context outside of the distributed ledger) violates the trust placed in the blockchain smart contract. An NFT representing Guernica would (almost) always have its identifier intact, but the external API that provides additional data can easily be replaced or simply switched off.
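The dependency described above can be sketched with a toy, in-memory model. This is not ERC-721 code: `ToyTokenRegistry`, `fetch_metadata`, the owner string and the `example.org` URI are all hypothetical names chosen for illustration, and a plain dictionary stands in for the external public API.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTokenRegistry:
    """Stand-in for the on-ledger part: token id -> owner and token id -> metadata URI."""
    owners: dict = field(default_factory=dict)
    token_uris: dict = field(default_factory=dict)

    def mint(self, token_id, owner, uri):
        if token_id in self.owners:
            raise ValueError("token already exists")
        self.owners[token_id] = owner
        self.token_uris[token_id] = uri

    def token_uri(self, token_id):
        return self.token_uris[token_id]


def fetch_metadata(registry, token_id, api):
    """Follow the on-ledger URI into the off-ledger API; returns None if nothing is served."""
    return api.get(registry.token_uri(token_id))


# Stand-in for a public API that lives outside the ledger (hypothetical URI).
uri = "https://example.org/meta/1"
external_api = {uri: {"title": "Guernica", "artist": "Pablo Picasso"}}

registry = ToyTokenRegistry()
registry.mint(1, "0xOwner", uri)
before = fetch_metadata(registry, 1, external_api)

# The API operator replaces the content; the ledger entry is untouched.
external_api[uri] = {"title": "Something else"}
after_swap = fetch_metadata(registry, 1, external_api)

# The API is switched off; the token still exists but its context is gone.
external_api.clear()
after_shutdown = fetch_metadata(registry, 1, external_api)

print(before, after_swap, after_shutdown, registry.token_uri(1))
```

In this sketch the token identifier and its URI never change, yet what the token "means" is decided entirely by whoever controls the off-ledger store.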

During the CryptoKitties craze, demand for ERC-721 based non-fungible tokens depicting kittens congested the Ethereum network close to its theoretical capacity. ERC-721 does not support batch transfers; whenever an action on a token is taken, it is processed in a separate transaction. Executing actions on 1,000 NFTs effectively results in 1,000 isolated transactions being run on the Ethereum network. Ultimately, this makes working with NFTs difficult to scale and not very cost-effective for actors that own and manage them in large numbers. There are other NFT standards that blockchain developers keep iterating on in an attempt to address some of these shortcomings (including batch transfers), but at the moment none is as battle-tested and as widely used as ERC-721. NFTs have found their niche notably in representing digital objects that have no physical counterparts. One of the most popular use cases, where non-fungible tokens are used in line with their design goals, is digital art. After all, NFTs are not a bad invention in themselves; however, using them means accepting the shortcomings they come with. Ultimately, anyone can mint (create) an NFT, map it to any object and claim its uniqueness. There does not seem to be any consistent mechanism or solution available to effectively counter this.

The need for decentralised identifiers (aIDs) in the art world

Layering data bricks: DIDs and DDOs

There is an urgent need in the art ecosystem for a new type of digital identifier (the aID) that will unify how we define, store and work with art objects and their inherent metadata across multiple domains. In Part 2 we established that such a solution should fulfil a certain set of conditions. All of them can be met by an emerging type of technology: decentralised identifiers (DIDs).

DIDs can be understood as identifiers for digital identities not dependent on any form of central governance and being always under full control of their respective owners. When implemented correctly, they are positioned at the very bottom layer of emerging decentralised network architectures and considered to be the smallest unit of verifiable and independently resolvable information (there is no need for a third party to resolve them).

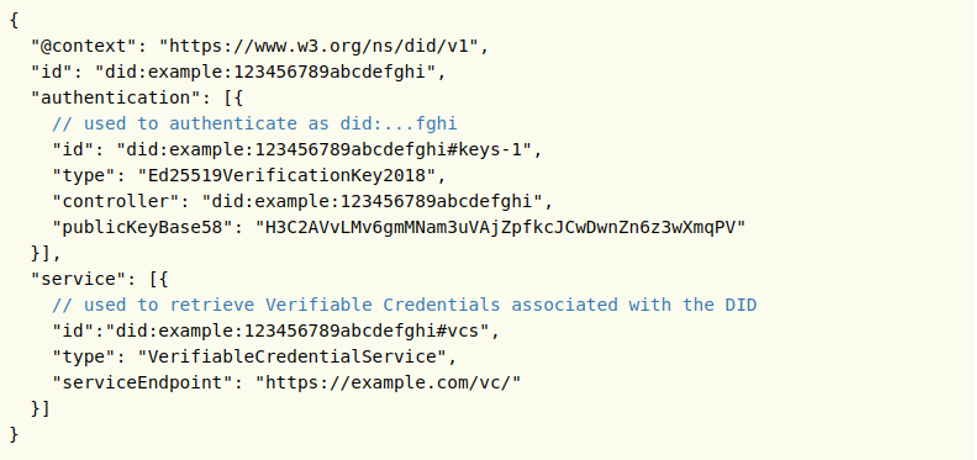

DIDs can be resolved to point to descriptor objects (DDOs), which are plain JSON-LD objects containing the machine-readable data of entities associated with the identifier. By default, everyone is able to resolve a DID, and the key-value structure of the resulting DDO makes it easy to code against. There is also some subtlety to the term identity, as DIDs can easily be extended to assign independently verifiable identifiers to places, usernames, events, organisations, or physical objects; essentially anything that can be described, which makes them a good fit for digitally twinning artworks.

A correctly implemented decentralised identifier has the following structure:

did:[did method]:[did method specific string]

For example:

did:aid:mm5rdglrrzzdz20xr1luqmfhs0fncjc2dvk3avnlefvrcvg=

The method is usually a short string that acts as a namespace for the DID and points to the behaviour defined in the associated method specification. It tells the system actor how to read and write a decentralised identifier (and resolve its associated descriptor objects) on a specific distributed ledger. The unique method-specific string can be multi-part and support additional parameters (including query strings), and it acts as a pointer to the actual document containing the metadata.
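The three-part structure can be illustrated with a deliberately lenient parser. This is a minimal sketch: `ParsedDID` and `parse_did` are hypothetical names, and the function only splits on colons; it does not enforce the character rules of the W3C DID syntax or handle paths and query strings.

```python
from typing import NamedTuple


class ParsedDID(NamedTuple):
    scheme: str
    method: str
    method_specific_id: str


def parse_did(did: str) -> ParsedDID:
    """Split a DID into scheme, method and method-specific string."""
    # Split at most twice so a multi-part method-specific string keeps its colons.
    parts = did.split(":", 2)
    if len(parts) != 3 or parts[0] != "did" or not parts[1] or not parts[2]:
        raise ValueError(f"not a DID: {did!r}")
    return ParsedDID(*parts)


parsed = parse_did("did:aid:mm5rdglrrzzdz20xr1luqmfhs0fncjc2dvk3avnlefvrcvg=")
print(parsed.method)              # the namespace that selects the method specification
print(parsed.method_specific_id)  # the pointer to the document within that method
```

A real resolver would use the method to look up the rules for reading the identifier on the corresponding ledger.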

Each document contains public keys, public claims made by the identifier, service endpoint definitions used for interoperable system interactions, and timestamps for auditability. The documents containing the metadata can be registered either on or off the ledger, leaving some headroom for tiered approaches when designing trust-based platforms. The ability to assign both open and private data to the identifier is very convenient in the context of artworks, given the secretive nature of parts of the art market.
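To make the shape of such a document more concrete, here is a sketch of a descriptor object and a toy resolver. The field names are loosely modelled on the W3C draft listed in the references and should be treated as illustrative only; the key material, the `ProvenanceService` type, the `example.org` endpoint, the timestamps and the in-memory `toy_ledger` are placeholders we made up for this example.

```python
import json

EXAMPLE_DID = "did:aid:mm5rdglrrzzdz20xr1luqmfhs0fncjc2dvk3avnlefvrcvg="

# Illustrative descriptor object; all values are placeholders.
ddo = {
    "@context": "https://w3id.org/did/v1",
    "id": EXAMPLE_DID,
    "publicKey": [
        {
            "id": EXAMPLE_DID + "#keys-1",
            "type": "Ed25519VerificationKey2018",
            "controller": EXAMPLE_DID,
            "publicKeyBase58": "<placeholder-public-key>",
        }
    ],
    "service": [
        {
            "id": EXAMPLE_DID + "#provenance",
            "type": "ProvenanceService",  # hypothetical service type
            "serviceEndpoint": "https://example.org/provenance",
        }
    ],
    "created": "2020-01-01T00:00:00Z",
    "updated": "2020-01-01T00:00:00Z",
}

# A dictionary stands in for the distributed ledger in this sketch.
toy_ledger = {EXAMPLE_DID: ddo}


def resolve(did, ledger):
    """Return the descriptor object registered for a DID, or raise if there is none."""
    document = ledger.get(did)
    if document is None:
        raise KeyError(f"no document registered for {did}")
    return document


print(json.dumps(resolve(EXAMPLE_DID, toy_ledger), indent=2))
```

Because the document is plain key-value data, a client can read the service endpoints or keys it needs without knowing anything else about the system that registered them.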

Verifiable claims for flexible interaction between parties

Another important aspect of decentralised identifiers is verifiable claims. DIDs support the notion of claims-based identity, where interaction with identities happens through the verification of claims. A claim is simply a statement that one party (or action) makes about itself or about another party (or action). Here, verifiable claims consist of three parts: the subject of the claim (literally anything descriptive), the issuing party, and the claim itself, which is usually a statement about the subject. A claim becomes verifiable when the issuer makes a statement about a subject and cryptographically signs it. From a technical point of view, claims are JSON documents with well-specified data models, but the details of exchanging them between actors (claim issuers, claim holders, and actors that verify them) are left to protocol designers and system implementers, and work in this area is ongoing.

A tangible example would be more fitting - let’s assume that an art broker would like to put an artwork up for auction, and the auction house would like to know some specific detail about the artwork's provenance. The broker obtains a verifiable claim from a renowned art expert (or estate), which states that the information provided by the broker is correct. The broker downloads the statement from the art expert and uploads it to the auction house, which then checks the claim and allows (or disallows) the broker to put the artwork up for sale.
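The flow in this example (issue, hand over, verify) can be sketched in a few lines. One loud assumption: to stay within the Python standard library, the sketch uses an HMAC with a shared demo key as a stand-in for the issuer's digital signature. Real verifiable claims use public-key signatures, so the verifier would only need the issuer's public key, not a shared secret. The helper names, the `did:example:art-expert` identifier and the claim fields are hypothetical and do not follow the W3C data model.

```python
import hashlib
import hmac
import json


def canonical(claim: dict) -> bytes:
    """Serialise the claim deterministically so issuer and verifier hash the same bytes."""
    return json.dumps(claim, sort_keys=True, separators=(",", ":")).encode("utf-8")


def issue(claim: dict, issuer_key: bytes) -> dict:
    """Issuer side: attach a proof to the claim (HMAC stands in for a signature)."""
    proof = hmac.new(issuer_key, canonical(claim), hashlib.sha256).hexdigest()
    return {"claim": claim, "proof": proof}


def verify(signed: dict, issuer_key: bytes) -> bool:
    """Verifier side: recompute the proof and compare in constant time."""
    expected = hmac.new(issuer_key, canonical(signed["claim"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signed["proof"])


# Demo key; in a real system this would be the expert's private signing key.
expert_key = b"demo-key-held-by-the-art-expert"

# The art expert issues a claim about the artwork, which the broker then carries.
signed_claim = issue(
    {
        "subject": "did:aid:mm5rdglrrzzdz20xr1luqmfhs0fncjc2dvk3avnlefvrcvg=",
        "issuer": "did:example:art-expert",
        "statement": "provenance information supplied by the broker is correct",
    },
    expert_key,
)

# The auction house checks the claim it received from the broker.
accepted = verify(signed_claim, expert_key)

# Any change to the claim after issuance invalidates the proof.
tampered = {
    "claim": dict(signed_claim["claim"], statement="a different statement"),
    "proof": signed_claim["proof"],
}
rejected = not verify(tampered, expert_key)

print(accepted, rejected)
```

The point of the sketch is the separation of roles: the issuer produces the proof once, the holder merely transports it, and the verifier can check it without contacting the issuer again.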

What is important to understand here is the flexibility of modeling actors and actions involved in the interactions. Any descriptive claim can be created, fit into a secure and confidential distributed network and associated with a decentralised identifier. Then, it can easily become a subject of cryptographically verifiable information exchange. We believe the potential use cases of this DID property are abundant in the art ecosystem.

The aID network

Centralised systems are a form of monolithic governance: easy to maintain, but prone to failure and difficult to scale. Actors in decentralised systems work without a central ordering authority, are equally privileged and thrive in achieving value through collaborative goals.

As suggested in previous paragraphs, the network for persistent aIDs should be decentralised, distributed, sustainable and free from any form of central authority. The following characteristics should also be considered while designing such a solution:

- Mitigations against network locality incidents, where the state of information is not equally distributed across peers

- Mitigations against internal and external network attack vectors

- End-to-end encryption for inter-node communication, including digitally signed messaging

- Encryption of data in transit and at rest

- Reputation schemes for authentication and authorisation, which penalise hostile actors

- Governance by distributed consensus models and algorithms

- Cryptography first

Distributed digital ledgers satisfy all the necessary criteria and act as a promising vessel for DID implementations. However, the ledger alone is not a complete solution: it also needs wallets for key management, DID resolvers (including bridging to a universal one), attestation APIs and other components developed against the immutable DID store.

What next?

By default, DIDs are ledger agnostic. They can be implemented with almost any distributed ledger technology, the one requirement being compliance with W3C specifications. ERC-725 is a proposed standard for decentralised identity built on top of the Ethereum blockchain. Keeping in mind the possible disadvantages of using a public blockchain as the technical backbone of the AIS (aID), a step forward would be to implement an ERC-725 + ERC-735 based proof-of-concept accompanied by a resolver, a claim verifier and a simple API for application clients to consume. Lessons learned from such an exercise would allow us to work on more fitting, federated blockchain solutions, running on network nodes not entirely dependent on public actors.

There is no quick win - share our vision

At Arteïa we would like to discourage thinkers from reaching for the low-hanging fruit and instead inspire everyone to build an innovative and fair network for art. It will take longer but will secure a more fit-for-purpose and sustainable outcome: considering the scale of the expected benefits for the art ecosystem, we deem it worth the effort and patience.

Using emerging types of digital identifiers built on top of a distributed, decentralised ledger further solidifies this direction. These are identifiers that carry the context of the data they point to, are easily resolvable at any point in time, are cryptographically verifiable from the ground up, and are globally unique. The technology-enhanced consensus on the origin and correctness of art records should be driven by the common effort of the collective and be open to verification by all actors in the value chain.

References:

https://w3c-ccg.github.io/did-spec/

https://www.w3.org/TR/vc-data-model

https://tools.ietf.org/html/rfc4122

https://www.w3.org/TR/json-ld11/

https://www.w3.org/2017/vc/WG/

https://ssimeetup.org/

http://www.weboftrust.info/papers.html

https://erc725alliance.org/

https://uniresolver.io/